By Reuven Harrison

The Kubernetes API server is a central component of Kubernetes. It allows cluster admins and other clients to manage and monitor the cluster through a rich set of APIs that can fetch and update the cluster state. It is therefore essential to secure the API server against attack. This post discusses network-level protection mechanisms that you can apply to the API server. For application-level protection (authn, authz and more) see this article.

Where Does the API Server Run?

The API server runs on a dedicated node called the Kubernetes Master. A cluster may have one or more such masters.

On some Kubernetes platforms (minikube, OpenShift and other self-managed environments) you can run this command on the master to see the API server:

ps -ef | grep apiserver

Managed Kubernetes clusters such as GKE, EKS and AKS don’t give you SSH access to the master, but you can still reach the API server running on it over HTTPS.

For security reasons, the API server should be the only externally exposed process on the master, and it should listen on a TLS-secured port such as 443, 6443 or 8443.
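On a self-managed master you can go a step beyond `ps -ef | grep apiserver` and check which address and port the API server was started with. The Python sketch below assumes a Linux host where the process is visible under `/proc` and its binary is named `kube-apiserver`, as in upstream and minikube setups. It reads the `--secure-port` and `--bind-address` flags and falls back to their upstream defaults (6443 and 0.0.0.0) when a flag is absent. The helper names are hypothetical.

```python
import os
from typing import Dict, List


def parse_flags(argv: List[str]) -> Dict[str, str]:
    """Turn ['kube-apiserver', '--secure-port=8443', ...] into {'secure-port': '8443', ...}."""
    flags: Dict[str, str] = {}
    args = argv[1:]  # skip the binary name
    i = 0
    while i < len(args):
        arg = args[i]
        if arg.startswith("--"):
            name, sep, value = arg[2:].partition("=")
            if not sep:
                # "--flag value" form, or a bare boolean flag
                if i + 1 < len(args) and not args[i + 1].startswith("--"):
                    value = args[i + 1]
                    i += 1
                else:
                    value = "true"
            flags[name] = value
        i += 1
    return flags


def find_apiserver_flags(proc_root: str = "/proc") -> List[Dict[str, str]]:
    """Return the parsed flags of every running kube-apiserver process."""
    results = []
    for pid in os.listdir(proc_root):
        if not pid.isdigit():
            continue  # skip entries like /proc/self, /proc/net
        try:
            with open(os.path.join(proc_root, pid, "cmdline"), "rb") as f:
                raw = f.read()
        except OSError:
            continue  # process exited or is not readable
        # /proc/<pid>/cmdline separates arguments with NUL bytes
        argv = [a.decode(errors="replace") for a in raw.split(b"\0") if a]
        if argv and os.path.basename(argv[0]) == "kube-apiserver":
            results.append(parse_flags(argv))
    return results


if __name__ == "__main__":
    for flags in find_apiserver_flags():
        print("secure-port:", flags.get("secure-port", "6443 (default)"))
        print("bind-address:", flags.get("bind-address", "0.0.0.0 (default)"))
```

Run it as root on the master. `parse_flags` accepts both the `--flag=value` and the `--flag value` forms, so it works regardless of how the API server manifest was written.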

Connecting to the API Server Through its Endpoint(s)

You can find the API server endpoints by running:

$ kubectl cluster-info

Kubernetes master is running at https://192.168.99.100:8443

...

In the example above, the API server is exposed on a private IP address (RFC 1918), which by definition is not routable on the public Internet. Clusters in the cloud often expose their API server publicly to simplify administrative access.
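To check programmatically whether an endpoint like the one above uses an RFC 1918 address, a minimal Python sketch can parse the URL and test the host against the three private blocks. It assumes the endpoint host is an IP literal; for DNS names (common on managed services) it returns `None`, and you would resolve the name first. The function name is hypothetical.

```python
import ipaddress
from typing import Optional
from urllib.parse import urlsplit

# The three private address blocks defined in RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def endpoint_is_rfc1918(url: str) -> Optional[bool]:
    """True/False for an IP-literal endpoint; None if the host is a DNS name."""
    host = urlsplit(url).hostname
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        return None  # a DNS name: resolve it first, then check each address
    return any(addr in net for net in RFC1918_NETWORKS)
```

Python's `ipaddress` module also offers an `is_private` property, but it covers more than RFC 1918 (loopback and link-local ranges, among others), so the sketch lists the three blocks explicitly.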

Open a browser and enter the address of the API server. You should receive this response:

{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",
  "reason": "Forbidden",
  "details": {},
  "code": 403
}

This means that you are not authorized to access the API server: it doesn’t know who you are, so it treats the request as coming from the anonymous user (system:anonymous), which is not allowed to read this path. This is good; otherwise anyone could manipulate your cluster.
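The body is a Kubernetes `Status` object, so a client can react to it programmatically instead of reading it in a browser. The Python sketch below pulls out the HTTP code, the reason and the user name the API server assigned to the request. Extracting the user relies on the wording of `message`, which is meant for humans and may change, so treat that part as a best-effort assumption. The type and function names are hypothetical.

```python
import json
import re
from typing import NamedTuple, Optional


class ApiError(NamedTuple):
    code: int
    reason: str
    user: Optional[str]  # the identity the API server assigned to the request


def parse_status(body: str) -> ApiError:
    """Extract the HTTP code, reason and user name from a Kubernetes Status object."""
    status = json.loads(body)
    if status.get("kind") != "Status":
        raise ValueError("not a Status object")
    # Assumption: the message embeds the user as  User "<name>" cannot ...
    match = re.search(r'User "([^"]+)"', status.get("message", ""))
    return ApiError(
        code=status.get("code", 0),
        reason=status.get("reason", ""),
        user=match.group(1) if match else None,
    )
```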

You can use kubectl as a proxy to authenticate to your API server:

$ kubectl proxy

Starting to serve on 127.0.0.1:8001

Point the browser to http://127.0.0.1:8001/ and you will see a list of the supported Kubernetes APIs.
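The same list can be fetched from a script. The API server's root path returns a JSON object with a `paths` array; the Python sketch below, assuming `kubectl proxy` is running on its default address, prints those paths. The function names are hypothetical.

```python
import json
import urllib.request
from typing import List

PROXY_URL = "http://127.0.0.1:8001"  # default address printed by `kubectl proxy`


def parse_root_paths(body: str) -> List[str]:
    """The API server root returns {"paths": [...]}; return the paths sorted."""
    return sorted(json.loads(body).get("paths", []))


def fetch_root_paths(base_url: str = PROXY_URL) -> List[str]:
    # Plain HTTP to localhost: the proxy itself handles TLS and
    # authentication to the API server using your kubeconfig credentials.
    with urllib.request.urlopen(base_url + "/", timeout=5) as resp:
        return parse_root_paths(resp.read().decode("utf-8"))


if __name__ == "__main__":
    for path in fetch_root_paths():
        print(path)
```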

How Pods Connect to the API Server

Pods in the cluster may also access the API server, typically for monitoring, management or security purposes.

Pods don’t need to know the external address of the API server; instead, they can use a special Kubernetes service in the default namespace called “kubernetes”.

$ kubectl describe service kubernetes

Name:              kubernetes
Namespace:         default
Labels:            component=apiserver
                   provider=kubernetes
Annotations:       <none>
Selector:          <none>
Type:              ClusterIP
IP:                10.96.0.1
Port:              https  443/TCP
TargetPort:        8443/TCP
Endpoints:         192.168.99.100:8443
Session Affinity:  None
Events:            <none>

As you can see, this is a special service without a pod selector; its endpoints are maintained by the API server itself. Pods connect through this service to the host name kubernetes.default.svc, and Kubernetes redirects the traffic to the API server endpoints by applying destination NAT (DNAT).
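A pod that connects this way still has to authenticate. A minimal Python sketch, assuming the service account token is mounted at the default path `/var/run/secrets/kubernetes.io/serviceaccount` (it is unless automounting is disabled) and that the API server certificate is valid for `kubernetes.default.svc`, as it typically is. The `__main__` block lists pods in the pod's own namespace, which also assumes its service account has RBAC permission to do so. The helper names are hypothetical.

```python
import os
import ssl
import urllib.request

SA_DIR = "/var/run/secrets/kubernetes.io/serviceaccount"
APISERVER = "https://kubernetes.default.svc"  # resolves to the service IP (10.96.0.1 above)


def build_request(path: str, sa_dir: str = SA_DIR) -> urllib.request.Request:
    """Create a GET request that authenticates with the pod's service account token."""
    with open(os.path.join(sa_dir, "token")) as f:
        token = f.read().strip()
    req = urllib.request.Request(APISERVER + path)
    req.add_header("Authorization", "Bearer " + token)
    return req


def cluster_tls_context(sa_dir: str = SA_DIR) -> ssl.SSLContext:
    """Trust only the cluster CA that is mounted next to the token."""
    return ssl.create_default_context(cafile=os.path.join(sa_dir, "ca.crt"))


if __name__ == "__main__":
    with open(os.path.join(SA_DIR, "namespace")) as f:
        namespace = f.read().strip()
    req = build_request(f"/api/v1/namespaces/{namespace}/pods")
    with urllib.request.urlopen(req, context=cluster_tls_context(), timeout=5) as resp:
        print(resp.status, resp.read()[:200])
```

Verifying against the mounted `ca.crt` rather than disabling certificate checks keeps the connection protected even inside the cluster network.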

Pods may also connect to the API server with kubectl proxy or directly.

Restricting Network Access to the API Server

If your API server is accessible from the Internet, ask yourself whether this is really needed. If it is, you can reduce the attack surface by restricting access to specific source address ranges.
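Managed services expose this as a list of authorized CIDR blocks, for example authorized networks on GKE or public access CIDRs on EKS. The underlying rule is a simple prefix match; the Python sketch below illustrates that logic only and is not any provider's implementation. The ranges are hypothetical placeholders taken from the RFC 5737 documentation blocks.

```python
import ipaddress
from typing import Iterable

# Hypothetical allow-list: replace with your own office or VPN egress ranges.
AUTHORIZED_CIDRS = ["203.0.113.0/24", "198.51.100.17/32"]


def source_is_authorized(client_ip: str, cidrs: Iterable[str] = AUTHORIZED_CIDRS) -> bool:
    """Return True if client_ip falls inside any of the authorized CIDR blocks."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(c) for c in cidrs)
```

Keeping the list short and using /32 entries for single hosts, as in the second placeholder, keeps the exposed surface as small as possible.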

Some managed Kubernetes services can also run private clusters whose API server is not reachable from the Internet: EKS and GKE support this, while AKS did not yet.

Inside the cluster, you should restrict access to the API server to the pods that need it. To do that, follow three steps:

1. Decide which pods require access to the API server. Tufin Orca, for example, can show you this:

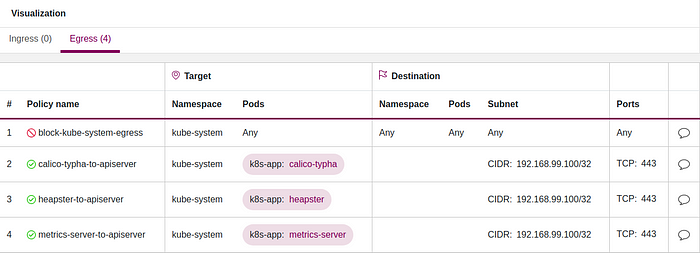

2. Define a Kubernetes egress network policy using IPBlock that allows access to the API server endpoints. For example, this policy allows just three services in kube-system to access the API server (calico-typha, heapster and metrics-server):
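A minimal sketch of such a policy, generated from Python and emitted as JSON, which `kubectl apply` accepts just like YAML. It assumes the endpoint from the `kubectl describe service kubernetes` output above (192.168.99.100:8443) and that the three components carry a `k8s-app` label. The policy name `restrict-apiserver` and the helper names are hypothetical, so adjust them to your cluster.

```python
import json
from typing import Dict, List

# Assumptions for illustration: endpoint from the `kubectl describe service
# kubernetes` output, and pods labelled with a `k8s-app` key.
APISERVER_ENDPOINT_IP = "192.168.99.100"
APISERVER_ENDPOINT_PORT = 8443
ALLOWED_APPS = ["calico-typha", "heapster", "metrics-server"]


def apiserver_egress_policy(ip: str, port: int, apps: List[str],
                            namespace: str = "kube-system") -> Dict:
    """Build a NetworkPolicy letting the selected pods reach the API server endpoint."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "restrict-apiserver", "namespace": namespace},
        "spec": {
            # Select only the pods that need the API server.
            "podSelector": {
                "matchExpressions": [
                    {"key": "k8s-app", "operator": "In", "values": list(apps)}
                ]
            },
            "policyTypes": ["Egress"],
            "egress": [{
                # Match the endpoint address rather than the service IP;
                # most CNI plugins evaluate policy after the service DNAT.
                "to": [{"ipBlock": {"cidr": f"{ip}/32"}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    policy = apiserver_egress_policy(
        APISERVER_ENDPOINT_IP, APISERVER_ENDPOINT_PORT, ALLOWED_APPS)
    print(json.dumps(policy, indent=2))
```

Redirect the output to restrict-apiserver.yaml; JSON like this also parses as YAML. Two NetworkPolicy rules matter here. A policy like this isolates the selected pods for egress, so they lose every other outbound connection (DNS, for example) unless another policy allows it. And pods that no egress policy selects keep unrestricted egress, so actually blocking all other pods also requires a default-deny egress policy in each namespace.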

3. Apply the network policy:

kubectl apply -f restrict-apiserver.yaml

Summary

The Kubernetes API server is the brain of your Kubernetes cluster. Restrict access to it to the absolute minimum: from outside the cluster, use standard networking and firewall mechanisms; from within the cluster, use Kubernetes network policies.